8. Monitoring & Evaluation of Transboundary MSP

Monitoring and evaluation lie at the heart of good practice in any MSP process, as they measure whether goals and objectives are being met. This step is also important for improving and adapting MSP in the “next generation” of planning, so that changes both internal and external to the MSP project can be incorporated, along with lessons learned from the previous “generation.”

Even though it is often placed at the end of a planning cycle, the framework for evaluation should actually be designed at the very beginning of a planning cycle (see example 8.3.1). Monitoring can only be done well if objectives are clearly set as part of the logical framework analysis process during the MSP project design stage (see 3.3) and, where relevant, again later when more specific objectives are set for actual planning, following the analysis and clarification of specific issues.

The “logical framework matrix” (European Commission 2004) implies that objectives are set against a defined set of assumptions, and that a limited number of objectively verifiable indicators are determined early on. These indicators are not only relevant to the stated objectives but can also be measured easily and cost-efficiently. A precondition for a good evaluation is that these target indicators are set against a baseline of current conditions, as measured at the beginning of the process. Further discussion of baseline conditions is included in the Stakeholder Participation Toolkit Chapter 4.2.3.2 Gathering and sharing baseline information. The clearer the objectives and desired outcomes of the given transboundary MSP process, the easier it is to develop appropriate evaluation criteria (see example 8.3.2).
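As a rough illustration of measuring an indicator against its baseline, the Python sketch below expresses progress as the share of the baseline-to-target distance covered so far. The `Indicator` class, its fields and the licensing-time figures are hypothetical, and the linear formula is one simple convention rather than a method prescribed by the logical framework guidance.

```python
from dataclasses import dataclass


@dataclass
class Indicator:
    """Hypothetical objectively verifiable indicator with a baseline and a target."""
    name: str
    baseline: float  # value measured at the beginning of the process
    target: float    # value the objective aims to reach

    def progress(self, current: float) -> float:
        """Share of the baseline-to-target distance covered (0.0 = baseline, 1.0 = target).

        Works for indicators that should rise or fall, because the sign of
        (target - baseline) cancels out. The result is clipped to [0, 1].
        """
        span = self.target - self.baseline
        if span == 0:
            raise ValueError(f"{self.name}: target equals baseline")
        share = (current - self.baseline) / span
        return max(0.0, min(1.0, share))


# Hypothetical example: reducing average licensing time from 18 to 12 months.
licensing = Indicator("average licensing time (months)", baseline=18.0, target=12.0)
print(f"{licensing.progress(15.0):.0%}")  # prints "50%"
```

Because the formula divides by the difference between target and baseline, it handles indicators that should fall (such as licensing time) as well as those that should rise; a real framework may instead need non-linear or qualitative scoring.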

It is therefore recommended to carry out a logical framework analysis covering objective setting (including overall objectives, project purpose, deliverables and assumptions) and related indicators as part of the overall project design. If done as a participatory process involving the whole project team, this can also help clarify the actual purpose and design of the project and act as a group-bonding exercise for the transnational partner group (see 3.4.5 for more on this topic). The resulting logical framework should subsequently be shared with the main stakeholder groups as a way to clarify and manage their expectations of the process. Further discussion on including stakeholders in monitoring and evaluation can be found in the Stakeholder Participation Toolkit Chapter 4.2.4 PHASE 4: Working with Stakeholders on Monitoring and Evaluations.

This analysis should be repeated over the course of the MSP process, in particular at the transition from the analytical stage to the planning stage.

At the current stage of MSP development, almost all monitoring and evaluation frameworks for transboundary MSP processes have focused on indicators related to the plan-making process rather than on the actual results and outputs of planning (i.e. the change it causes), let alone on evaluation of plan implementation or plan impact.

Typical indicators and criteria have been developed for:

•Size of the area and number of sectors analysed and planned (which does not necessarily mean the plans are implemented);

•Number of issues identified and analysed;

•Capacity built among the multi-disciplinary team;

•Number of stakeholders engaged in the process and willing to stay engaged;

•Number and quality of contributions from stakeholders; and

•Number of users of MSP databases.

Some of these indicators may overlap with those included in the ecosystem-based 5-module approach (see the Strategic Approach toolkit Chapter 3). A set of indicators which can be adapted in relation to specific MSP objectives is included in the LME Scorecard.

In some instances, a subsequent increase in political will, or even agreement on guidelines, was selected as an indicator of achievement of overarching objectives. The key resource described in 8.3.3 provides more detailed information on developing indicators and criteria, as well as further examples.

As the practice of MSP matures and more initiatives move on to implementing the policies, rules and procedures called for in a plan, it becomes important to identify and track the actual changes in human and institutional behaviour that the MSP provisions were designed to bring about. While many external factors may influence higher-level MSP goals (e.g. blue growth, increased ocean energy, more aquaculture, increased fish stocks, healthier ecosystems), projects should dare to state clearly what their own contribution will be (e.g. increased use of sustainable fishery techniques in the given area; reduced licensing time and costs for new maritime activities; fewer legal disputes; more sustainable maritime activities). To increase the number of stakeholders and politicians engaged in such processes, MSP planners should also show more confidence in what MSP processes can actually be designed to achieve.

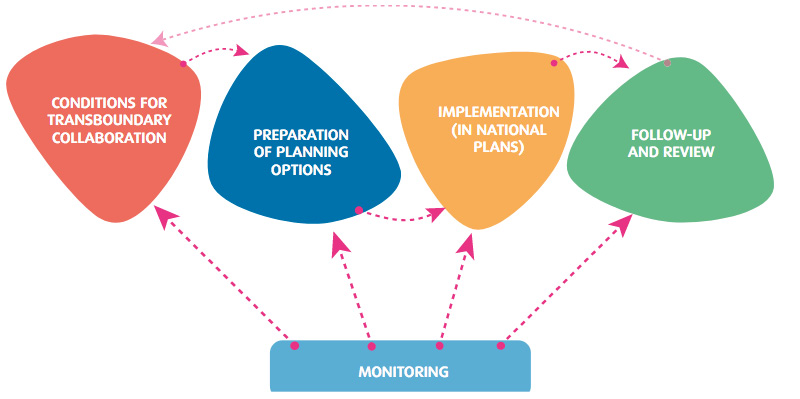

8.3.1 EXAMPLE: Evaluation framework for trans-boundary MSP (Baltic SCOPE)

The framework suggests a generic methodology to evaluate trans-boundary aspects of MSP. It is a bottom-up evaluation method, offered as an alternative to ready-made evaluation frameworks, and recommends a theory-based approach to anticipate, and later test, why an intervention produces intended and unintended effects, for whom and in which contexts, and what mechanisms the intervention triggers.

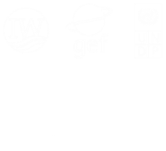

The framework defines 13 criteria and 65 indicators for five topics: a) preparation of the plan, b) outputs of trans-boundary agreements, c) outcomes, d) follow-up and evaluation, and e) cross-cutting themes. The following table gives examples of plausible theories of change for trans-boundary collaboration in MSP:

Figure 49: Table of causes and effects of transboundary MSP (BalticSCOPE 2017)

The approach can help in planning trans-boundary collaboration and in answering questions such as:

•What can be the expected results?

•What are possible time-spans?

•What are the most likely difficulties in achieving the results?

A set of evaluation criteria and corresponding indicators is necessary for a systematic and transparent evaluation; these are presented as lists of criteria and indicators in the annex of the report (lxii). The lists were developed from the literature on MSP evaluation and, in particular, from interviews and observations conducted during the Baltic SCOPE project. Input for the framework was also collected from project partners in two working groups organised during the project.

The set of criteria and indicators is structured into five categories:

Figure 50: Topics and foci of the evaluation framework (BalticSCOPE 2017b)

However, the methodology has not yet been used in statutory MSP processes. During the project, it became apparent how important stakeholder engagement can be when working across different languages and when motivating stakeholders to participate.

8.3.2 EXAMPLE: Quality checklist for transboundary MSP processes (TPEA)

A framework for evaluating the conduct and outcome of transboundary MSP in two pilot areas was developed as part of the TPEA process. It sets out to answer the following questions:

•What is to be evaluated?

•When should evaluation be carried out?

•Who should evaluate?

•How are results to be presented?

•Who should be responsible for spatial data collection?

•What resources are needed?

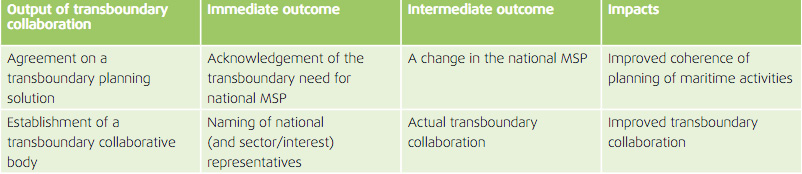

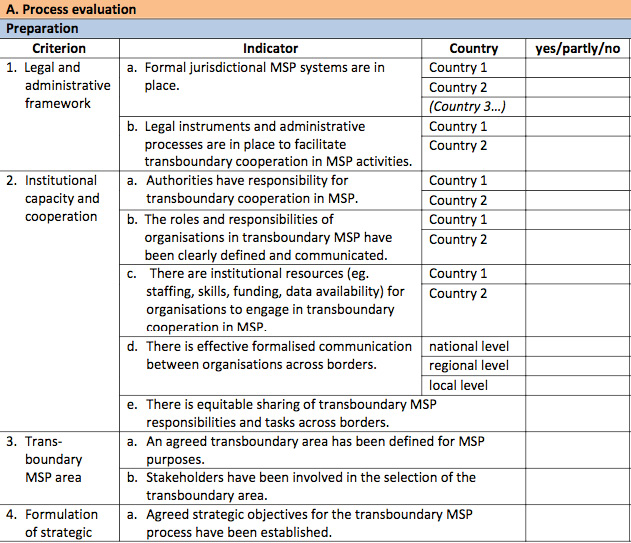

The evaluation criteria and indicators outlined cover a range of institutional and spatial issues and follow the logic of the TPEA process diagram:

Figure 51: TPEA process diagram (TPEA 2015)

Given that the main project objective was to develop recommendations for a transboundary approach to MSP in two pilot areas, the TPEA evaluation framework mostly focuses on “evaluation of the plan-making process.” The indicative TPEA quality checklist for transboundary MSP processes covers preparatory steps, definition and analysis of the trans-boundary area, planning, and communication, as shown here:

Figure 52: An extract of the indicative TPEA quality checklist for trans-boundary MSP processes (TPEA 2015)

The quality checklist has been applied and tested in the two pilot areas (Irish Sea and Gulf of Cadiz). Ideally, the checklist should continue to be used at regular intervals beyond the lifetime of TPEA. Each country involved in a transboundary exercise should fill in the checklist, either in a collaborative process or individually followed by a discussion of the results. The indicative evaluation checklists should be understood as flexible instruments that can be expanded and adapted as needed. Working with the checklists requires data for the pilot areas themselves as well as transboundary data. Evaluation is a continuous or periodic process and should be carried out as part of regular meetings using TPEA's adaptive process checklist, which is less resource-intensive than a formal review process. However, it can be challenging to judge the resources required, taking cost-effectiveness into account, and to decide whether to use the entire checklist or only the relevant sections.
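Where each country fills in the checklist individually, the subsequent discussion can focus on the items where answers differ. The Python sketch below assumes a hypothetical encoding of answers as "yes", "partly" or "no" per (section, item) pair; the section names echo the checklist headings above, but the items, statuses and countries are invented for illustration and are not taken from the TPEA checklist.

```python
from collections import defaultdict

# Hypothetical encoding: country -> {(section, item): status}
Answers = dict[str, dict[tuple[str, str], str]]


def items_to_discuss(answers: Answers) -> list[tuple[str, str]]:
    """Return (section, item) pairs on which the countries' answers differ."""
    all_items: set[tuple[str, str]] = set()
    for per_country in answers.values():
        all_items.update(per_country)
    disputed = []
    for item in sorted(all_items):
        # An item a country skipped counts as its own status.
        statuses = {per_country.get(item, "not answered") for per_country in answers.values()}
        if len(statuses) > 1:
            disputed.append(item)
    return disputed


def section_completion(answers: Answers, country: str) -> dict[str, float]:
    """Share of items answered "yes" in each section, for one country."""
    totals: dict[str, int] = defaultdict(int)
    done: dict[str, int] = defaultdict(int)
    for (section, _item), status in answers[country].items():
        totals[section] += 1
        if status == "yes":
            done[section] += 1
    return {section: done[section] / totals[section] for section in totals}


# Invented example answers from two hypothetical countries.
answers: Answers = {
    "Country A": {
        ("Preparatory steps", "Joint objectives agreed"): "yes",
        ("Preparatory steps", "Data-sharing arrangement in place"): "partly",
        ("Communication", "Stakeholders informed in both languages"): "yes",
    },
    "Country B": {
        ("Preparatory steps", "Joint objectives agreed"): "yes",
        ("Preparatory steps", "Data-sharing arrangement in place"): "no",
        ("Communication", "Stakeholders informed in both languages"): "yes",
    },
}
print(items_to_discuss(answers))
# [('Preparatory steps', 'Data-sharing arrangement in place')]
print(section_completion(answers, "Country A"))
# {'Preparatory steps': 0.5, 'Communication': 1.0}
```

Flagging disagreements rather than averaging scores keeps the focus on the joint discussion the checklist is meant to prompt; the same structure works whether the checklist is used in full or only for selected sections.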

8.3.3 KEY RESOURCE: Indicator Development Handbook (MSP for Blue Growth Study)

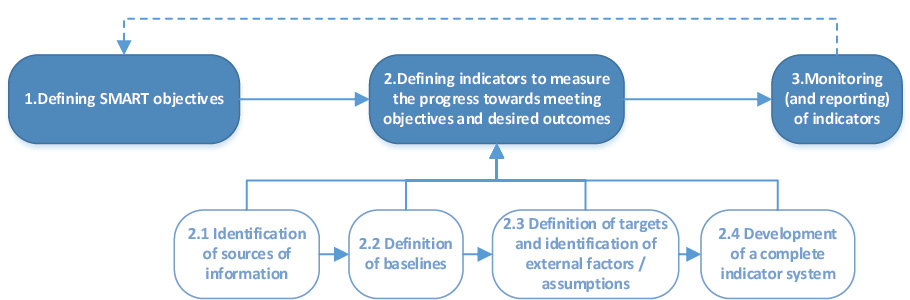

The MSP indicator development handbook is a guidance document developed to assist policy makers and stakeholders in their decision-making on blue growth development. The handbook provides an overview of the indicator development process, detailed descriptions of the role of indicators in the MSP cycle, and a process description for developing indicators.

The handbook has been designed to help experts develop MSP indicators that are context- and objective-specific, using a systematic three-step approach. The first step is to define SMART (Specific, Measurable, Attainable, Realistic and Time-bound) objectives (see 3.3 for definitions) that are scale- and context-specific for an identified blue growth project. Developing indicators then involves identifying sources, defining baselines and targets, and identifying external factors that may influence outputs. The resulting indicators can later be used for monitoring and evaluation to assess whether the expected results have been delivered.

Figure 53: Indicator development process (European Commission 2018)

Once SMART objectives are defined, the following elements are needed to develop corresponding indicators: an indicator title, measurement unit, MSP dimension, indicator type, baseline value, information source, calculation method, reporting and communication arrangements, among others.
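The elements listed above can be captured in a simple record so that gaps are visible before monitoring starts. This is a minimal Python sketch: the `IndicatorSpec` class, its field names and the example values are hypothetical, and the handbook's own template may be structured differently and include further elements.

```python
from dataclasses import dataclass, fields
from typing import Optional


@dataclass
class IndicatorSpec:
    """Hypothetical indicator template; fields mirror the elements named in the text."""
    objective: str            # the SMART objective this indicator tracks
    title: str
    unit: str
    msp_dimension: str
    indicator_type: str
    baseline: Optional[float]
    target: Optional[float]
    source: str
    calculation_method: str
    reporting: str            # reporting and communication arrangements

    def missing_elements(self) -> list[str]:
        """Names of elements that are still None or a blank string."""
        missing = []
        for f in fields(self):
            value = getattr(self, f.name)
            if value is None or (isinstance(value, str) and not value.strip()):
                missing.append(f.name)
        return missing


# Invented example: two elements have not been decided yet.
spec = IndicatorSpec(
    objective="Reduce average licensing time for new maritime activities by 2030",
    title="Average licensing time",
    unit="months",
    msp_dimension="",
    indicator_type="outcome",
    baseline=18.0,
    target=12.0,
    source="National licensing authority records",
    calculation_method="Mean time from application to decision",
    reporting="",
)
print(spec.missing_elements())  # ['msp_dimension', 'reporting']
```

Keeping the objective inside the record ties each indicator back to the SMART objective it is meant to measure, which is the link the handbook's process depends on.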

This approach can help develop efficient and concise blue growth and MSP projects. The indicators are project-specific, yet the approach is flexible, since it can be adapted and applied to different projects. Nonetheless, the approach has limitations in its composition and use, since it covers only a small part of a complex MSP decision-making system. Because there are few one-to-one links between MSP and the achievement of an objective, it is difficult to select indicators that truly measure the success of MSP. The scope of use is also limited, as the indicators can only be interpreted for country- and context-specific cases.